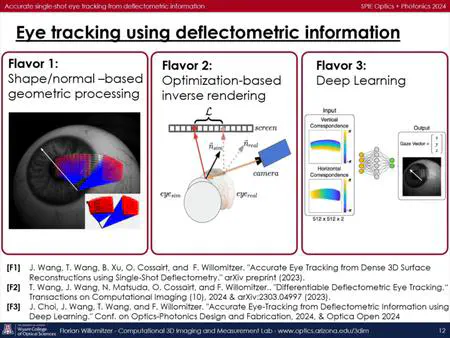

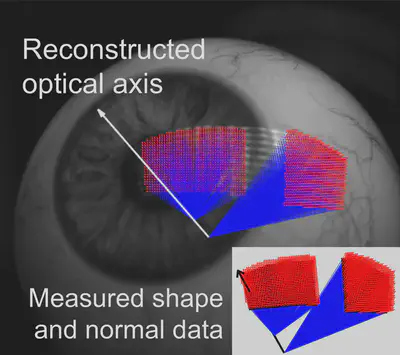

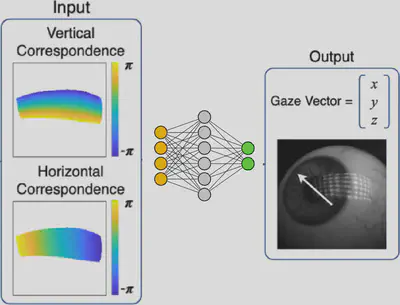

In this research track, we rethink how specular reflections can be used for eye tracking: we have developed a set of novel methods that use dense deflectometric information to evaluate the gaze direction quickly and accurately. Deflectometry is a well-established technique in optical 3D metrology for measuring specular surfaces. For the first time, our group has developed a family of “computational deflectometry” methods for eye tracking. The reflection of a patterned screen is observed on the specular surface of the eye, and the gaze information is encoded in the deformation of the pattern in the camera image. With “standard” screens and cameras (~1 Mpix resolution), the number of acquired reflection surface points (“glints”) can easily be increased by a factor of more than 3000 compared to the current state of the art in glint tracking. This additional information enables eye tracking at high accuracy. So far, we have explored three different approaches to decoding the gaze information encoded in the deflectometric images.

Research news featured on official University of Arizona Websites:

Inside Arizona Research (retrieved Apr. 11, 2025)

UANOW Newsletter (retrieved Apr. 11, 2025)

Tech Launch Arizona (Apr. 1, 2025)

Local Radio Interviews and Reports:

Interview KVOI Radio: “The Morning Voice”

Research news featured on external websites (selection):

Phys.org: “‘Superhuman vision’: Powerful 3D imaging technology paves way for next-generation eye-tracking” (Apr. 1, 2025)

ScienceDaily: “New 3D technology paves way for next-generation eye-tracking” (Apr. 1, 2025)

Optics.org: “Arizona sharpens imaging for eye-tracking” (Apr. 1, 2025)

PHOTONICS Spectra: “Eye-Tracking Technique Based on 40K Surface Points” (Apr. 1, 2025)

Mindplex: “New eye-tracking technology boosts accuracy” (Apr. 1, 2025)

eeNews Europe: “3D imaging to significantly boost eye-tracking accuracy” (Apr. 1, 2025)

News-Medical: “Scientists achieve breakthrough in eye movement detection technology” (Apr. 1, 2025)

Mirage News: “3D Tech Revolutionizes Next-Gen Eye-Tracking” (Apr. 1, 2025)

E-Med News: “Scientists Unveil Next-Gen Eye-Tracking with Unmatched Precision” (Apr. 1, 2025)

MSN: “Scientists achieve breakthrough in eye movement detection technology” (Apr. 5, 2025)

all-about-industries: “Precise Eye Tracking Through Deflectometry” (Apr. 14, 2025)

International News Coverage: