Computational Imaging for Art and Cultural Heritage

Computational imaging is a powerful tool for the preservation and analysis of art and cultural heritage. It provides detailed, non-invasive, and non-destructive documentation of paintings, sculptures, and even delicate objects such as stained-glass artworks or analog holograms, which are particularly prone to degradation. High-resolution digital 3D models reveal surface details, structural features, and signs of wear, supporting condition monitoring and virtual restoration without risking damage to the original.

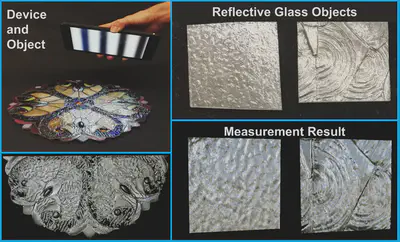

In several of our projects, we have integrated these capabilities into portable, hand-guided 3D imaging devices, which often consist only of off-the-shelf hardware such as ordinary mobile phones or tablets. This makes the methods accessible to a wider community of users and institutions.

SkinScan: Low-Cost 3D-Scanning for Dermatologic Diagnosis and Documentation.

2021 IEEE International Conference on Image Processing (ICIP), 2021.

3D Surface Measurement and Analysis of Works of Art.

2019 53rd Asilomar Conference on Signals, Systems, and Computers, 2019.

A Low-Cost Solution for 3D Reconstruction of Large-Scale Specular Objects.

Computational Optical Sensing and Imaging, 2021.

In this research track, we develop procedures to render the visual content of analog film holograms from sparse image data that can be captured in seconds with off-the-shelf devices such as mobile phones. Our approaches leverage light field rendering and Neural Radiance Fields (NeRF), a learning-based method for synthesizing novel views of complex volumetric scenes. For NeRF, both our qualitative and quantitative experiments demonstrate that it can render novel views even from captures of analog holograms, and can thus preserve them digitally without complicated optical setups.
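To make the NeRF rendering step more concrete, here is a minimal sketch of the standard discrete volume-rendering quadrature used by NeRF. A pixel color is accumulated along its camera ray as C ≈ Σ_i T_i (1 − exp(−σ_i δ_i)) c_i, with transmittance T_i = exp(−Σ_{j<i} σ_j δ_j), where σ_i is the density, c_i the color, and δ_i the spacing of sample i. This is not our hologram pipeline: the trained network that would predict σ and c is omitted, and the function name `composite_ray` and the sample values are hypothetical.

```python
import math
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def composite_ray(sigmas: Sequence[float], colors: Sequence[RGB],
                  deltas: Sequence[float]) -> Tuple[RGB, float]:
    """Alpha-composite samples along one camera ray, front to back.

    sigmas: volume density at each sample (>= 0), e.g. predicted by a NeRF
    colors: RGB radiance at each sample
    deltas: distance between consecutive samples along the ray
    Returns the rendered pixel color and the accumulated opacity.
    """
    if not (len(sigmas) == len(colors) == len(deltas)):
        raise ValueError("sigmas, colors and deltas must have the same length")
    transmittance = 1.0  # T_i: fraction of light that reaches sample i
    rgb = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha           # contribution of this sample
        for k in range(3):
            rgb[k] += weight * color[k]
        transmittance *= 1.0 - alpha  # running product = exp(-sum sigma_j * delta_j)
    return (rgb[0], rgb[1], rgb[2]), 1.0 - transmittance


if __name__ == "__main__":
    ln2 = math.log(2.0)
    # Two half-transparent samples: red in front, green behind (hypothetical values).
    color, opacity = composite_ray([ln2, ln2], [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)], [1.0, 1.0])
    print(color, opacity)  # ≈ (0.5, 0.25, 0.0) 0.75
```

Because the transmittance is updated as a running product, the loop is a single front-to-back pass; the same weights are what a NeRF implementation would differentiate with respect to the network outputs during training.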

How to Archive the Visual Contents of Aging Analog Film Holograms?

Deutsche Gesellschaft für angewandte Optik, 2023.

In a related concept dubbed “Flying Triangulation”, we pair a “sparse” single-shot line triangulation sensor, which projects about 10 narrow straight lines, with sophisticated real-time registration algorithms. The captured sparse 3D line profiles are registered to each other “on the fly” while the sensor is guided freehand around the object or the object is moved in front of the sensor (see videos). The result is a dense 3D model of the object with high depth precision.
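Each projected line defines a plane of light in space, so a single camera image already yields 3D points: the viewing ray of every illuminated pixel is intersected with the light plane of its line. The sketch below illustrates this textbook ray-plane triangulation for one line profile. It assumes an ideal, calibrated pinhole camera at the origin, a known plane for each projected line, and that every pixel has already been assigned to the correct line; the names `pixel_ray`, `intersect_plane`, `triangulate_profile` and all numbers are hypothetical, and the real-time registration of successive profiles is not shown.

```python
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def pixel_ray(u: float, v: float, fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """Viewing-ray direction through pixel (u, v) of an ideal pinhole camera
    at the origin looking along +z (lens distortion is ignored)."""
    return ((u - cx) / fx, (v - cy) / fy, 1.0)


def intersect_plane(direction: Vec3, plane_point: Vec3, plane_normal: Vec3) -> Optional[Vec3]:
    """Intersect the ray X = t * direction (t > 0) with the plane
    n . (X - plane_point) = 0. Returns None if the ray is parallel to the
    plane or the intersection lies behind the camera."""
    denom = dot(plane_normal, direction)
    if abs(denom) < 1e-12:
        return None
    t = dot(plane_normal, plane_point) / denom
    if t <= 0.0:
        return None
    return (t * direction[0], t * direction[1], t * direction[2])


def triangulate_profile(pixels: Sequence[Tuple[float, float]],
                        intrinsics: Tuple[float, float, float, float],
                        plane_point: Vec3, plane_normal: Vec3) -> List[Vec3]:
    """Turn the (sub-)pixel positions of ONE detected line into a sparse 3D profile."""
    fx, fy, cx, cy = intrinsics
    profile: List[Vec3] = []
    for u, v in pixels:
        p = intersect_plane(pixel_ray(u, v, fx, fy, cx, cy), plane_point, plane_normal)
        if p is not None:
            profile.append(p)
    return profile


if __name__ == "__main__":
    # Hypothetical calibration: projector 0.1 m to the right of the camera,
    # one light plane (x - 0.1) + 0.5 * z = 0.
    intrinsics = (1000.0, 1000.0, 320.0, 240.0)
    profile = triangulate_profile([(20.0, 440.0)], intrinsics, (0.1, 0.0, 0.0), (1.0, 0.0, 0.5))
    print(profile)  # ≈ [(-0.15, 0.1, 0.5)]
```

With about 10 lines per exposure, one image yields about 10 such profiles; it is the registration of many consecutive sets of profiles, not sketched here, that builds up the dense model.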

Visit the Osmin3D YouTube channel for more videos.

Color 3D movie of a talking face - RAW data (no post-processing)

Color 3D movie of another talking face - RAW data (no post-processing)

Real-time 3D movie of a bouncing ping-pong ball - RAW data (no post-processing)

Real-time 3D movie of a folded sheet of paper - RAW data (no post-processing). High spatial frequencies of the object surface are preserved.

How to watch a ‘3D movie’

3D movie of a talking face with unidirectional lines, plus close-up - RAW data (no post-processing).

3D movie of a talking face with unidirectional lines - RAW data (no post-processing).

Flying Triangulation Dental Scanner

Flying Triangulation Face Scanner

3D models measured with Flying Triangulation (no post-processing).

360° scan of a plaster bust.

Interview on Flying Triangulation (March 2013)

In German with English subtitles

AIP Conference Proceedings, 2013.

Single-Shot 3D Sensing Close to Physical Limits and Information Limits.

Dissertation, University of Erlangen-Nuremberg, 2017. Published as a book in the series “Springer Theses” in 2019.